
Designed an information-based voice UI using a communication-driven design process. It addresses a specific problem that the team identified and defined before moving into the research phase.

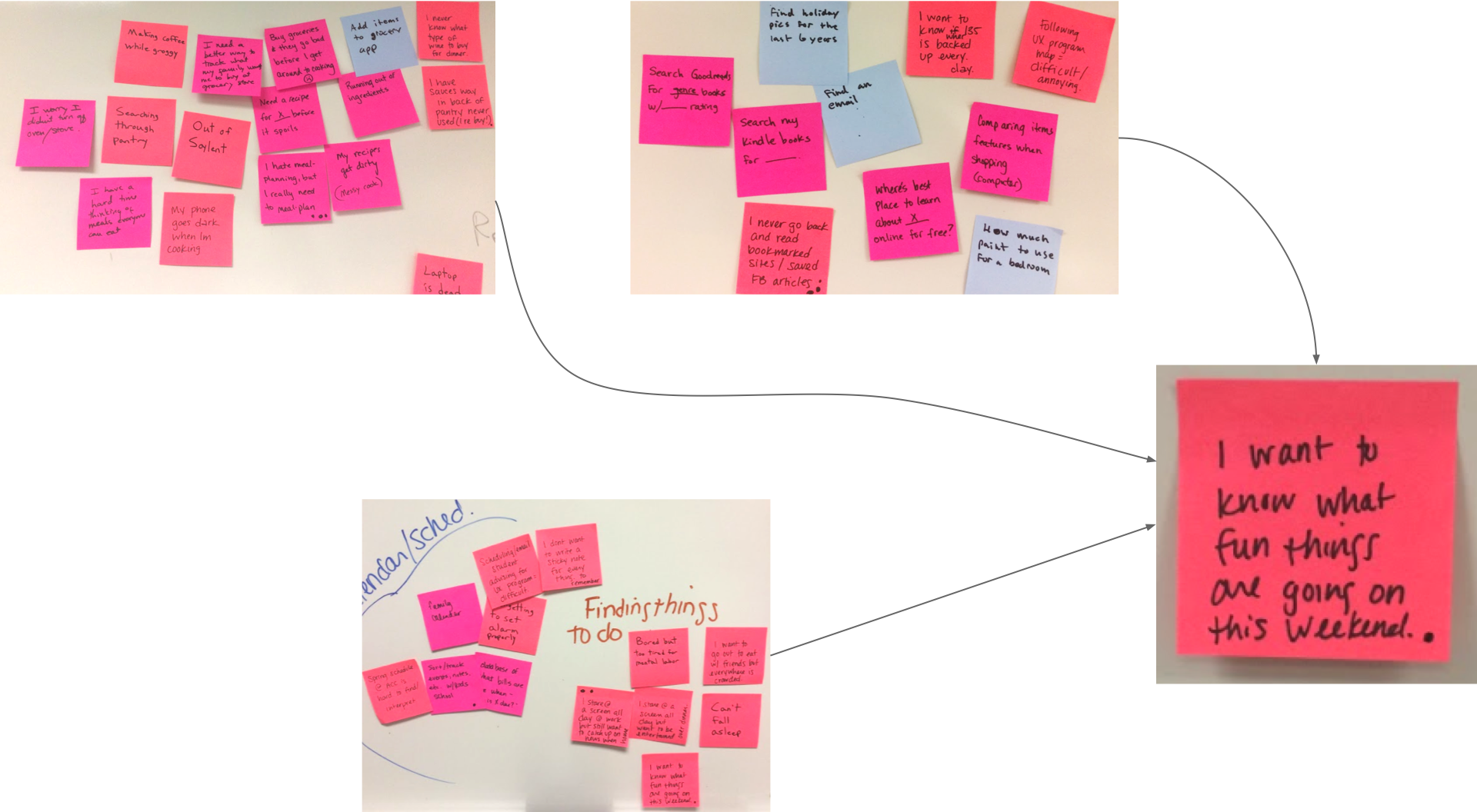

This team project focused on developing a working voice UI (VUI). An intensive brainstorming session helped my team and me list interesting ideas, questions and points of view, all pointing to ways a VUI could help our users in a particular context. Affinity mapping then grouped similar topics together, and patterns emerged. These patterns helped define our chosen problem:

'I want to know what fun things are going on this weekend'

What VUIs can do and what users actually use them for are two different things! So we decided to start simple and help both the user and the VUI accomplish one task well.

While it’s useful to know how users generally use voice, as UX designers we needed to conduct our own user research specific to the VUI app we were designing. So we began by setting research goals.

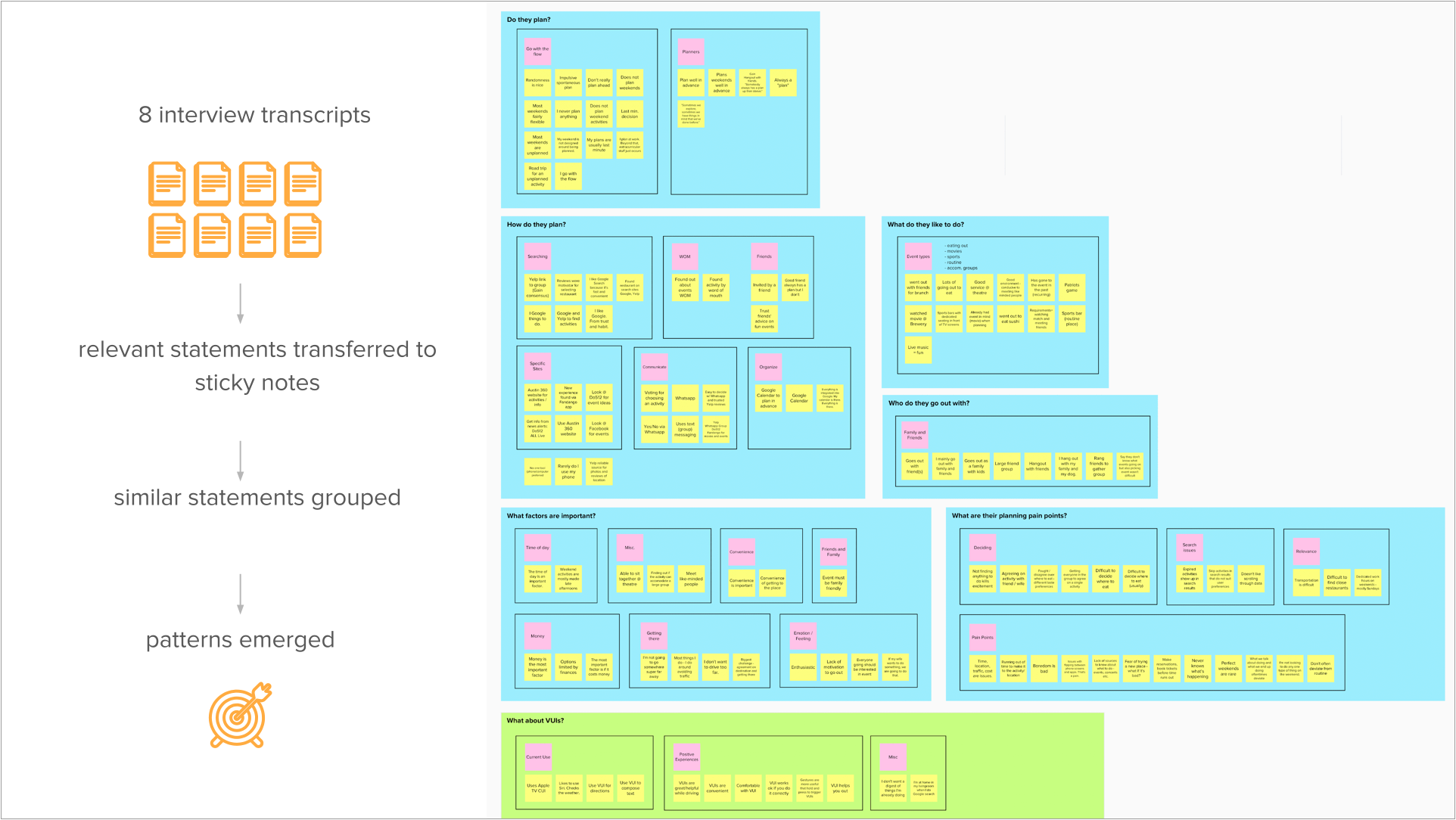

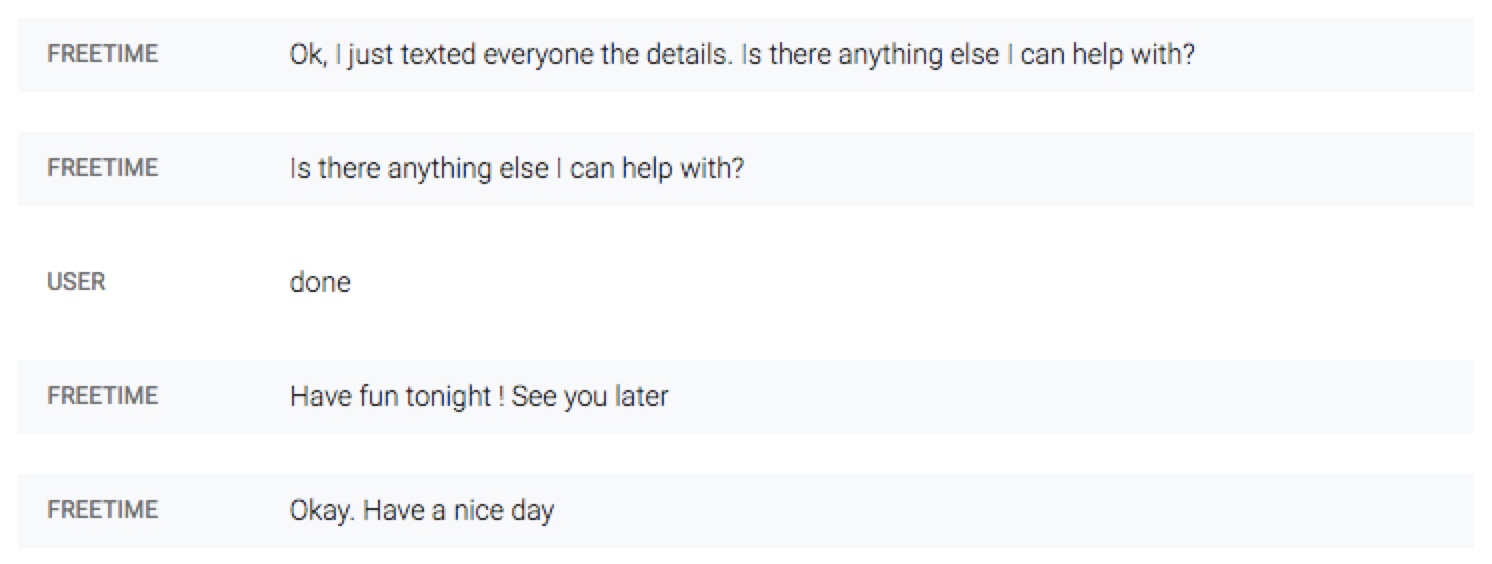

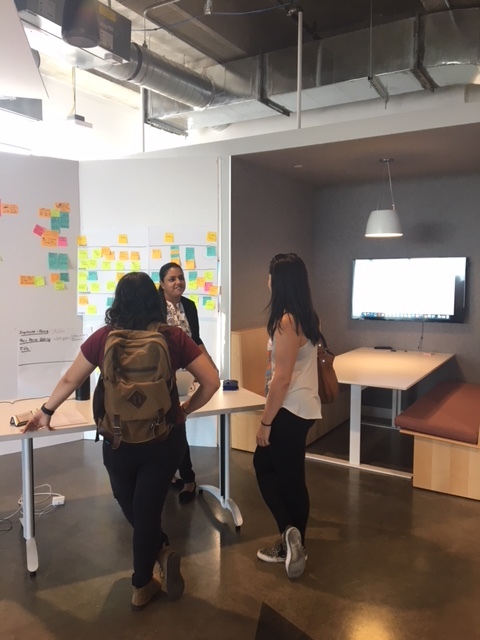

We each identified and interviewed 2 target users. All participants were between the ages of 24–40, considered themselves tech-savvy iPhone users, and had heard of or used a VUI before. The interview and observation sessions (above) surfaced a number of key insights.

We then used affinity mapping to group these key insights together and identify recurring patterns.

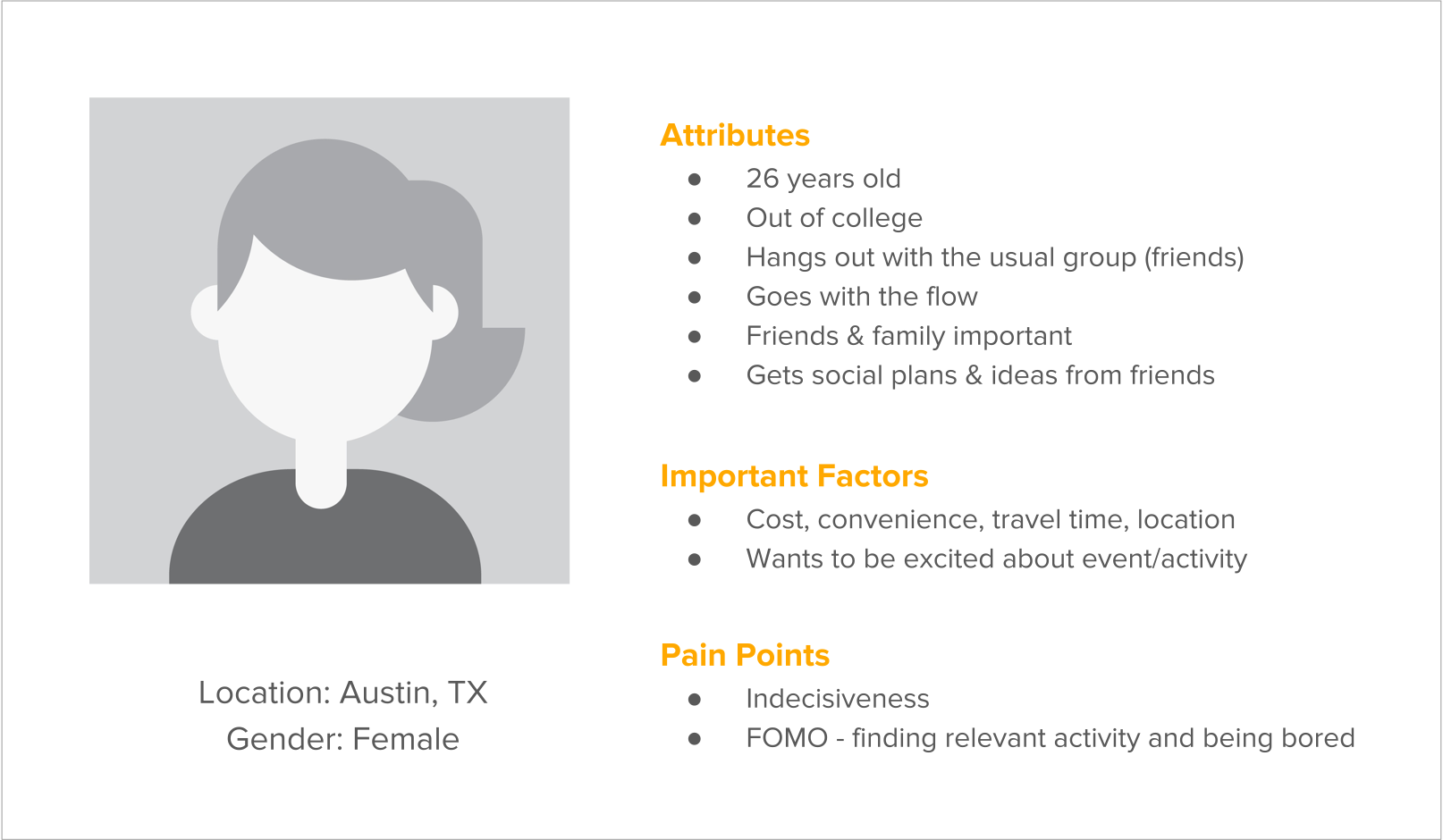

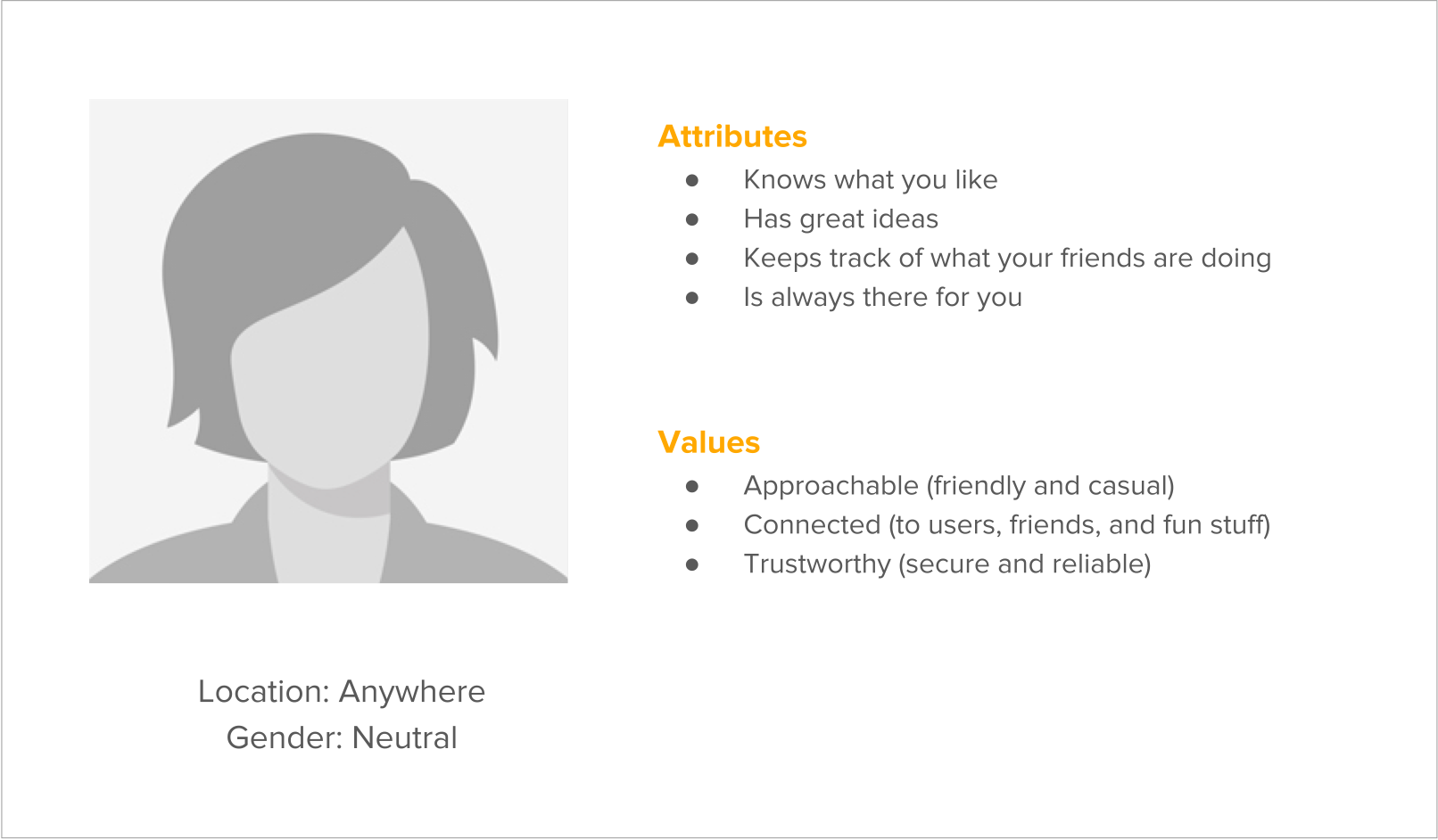

Based on the types of users we interviewed and the insights we uncovered, we created a user persona to drive design decisions going forward.

As a user, I want the voice assistant to recommend fun things I would like to do over the weekend, so that I can stay updated about what’s happening in town and have a great time with friends.

- JEN

Building a user story for Jen helped inform the user requirements and content strategy and, most importantly, pointed us toward developing a persona for the VUI as well.

Conversational Design Strategy helped us discover the need for a VUI Persona

During the interviews, many users expressed anger at a cold computer that is, after all, only pretending. And because voice is such a personal marker of an individual’s social identity, the stakes are always high: users of poorly designed VUIs reported feeling “foolish,” “silly,” and “manipulated” by the technology. It was therefore important for us to establish core attributes and values for Freetime at this stage of the design process.

Imagine . . . You just got off work. You want to find something fun to do with friends. But you don't want to drive all over town without a plan or spend a lot of money. Just ask Freetime.

Freetime is a Voice UI that recommends nearby activities and eliminates manual searching. Users get a VUI that is contextually aware with up-to-the-minute information.

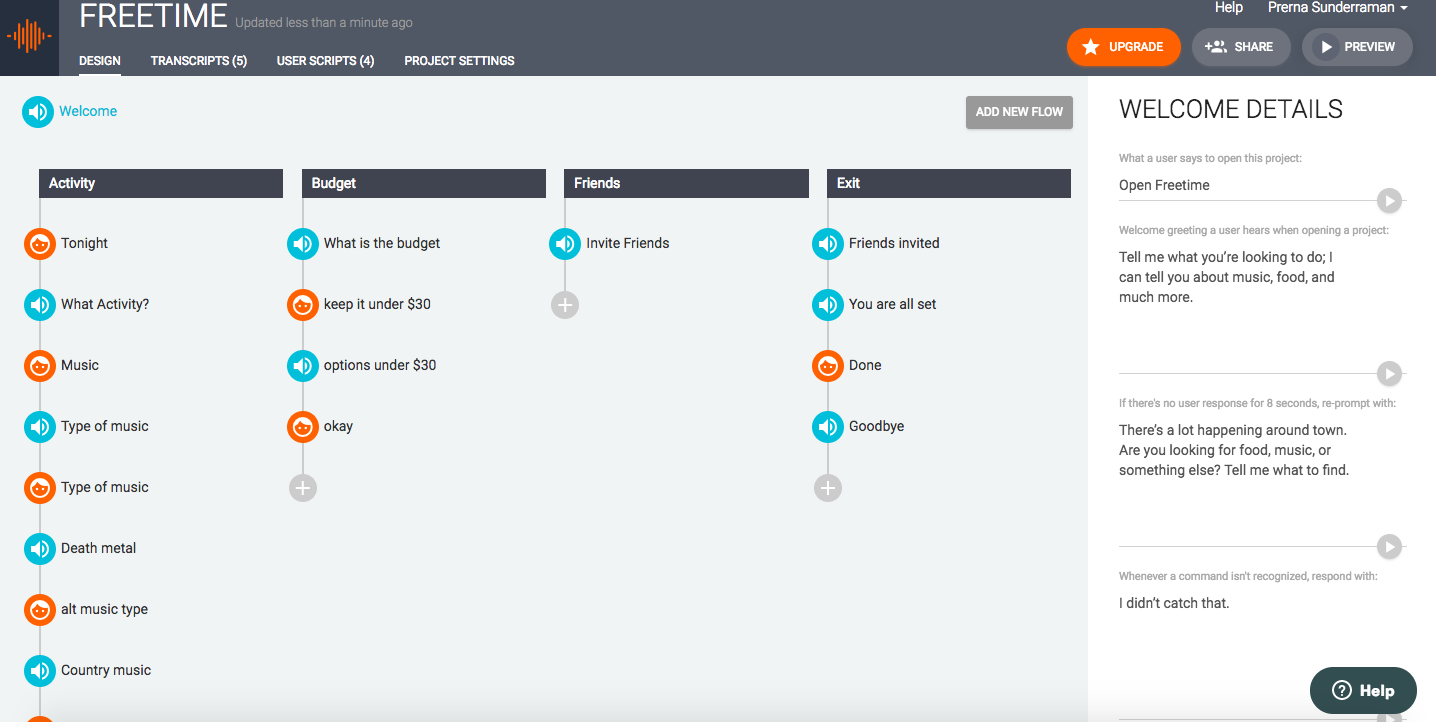

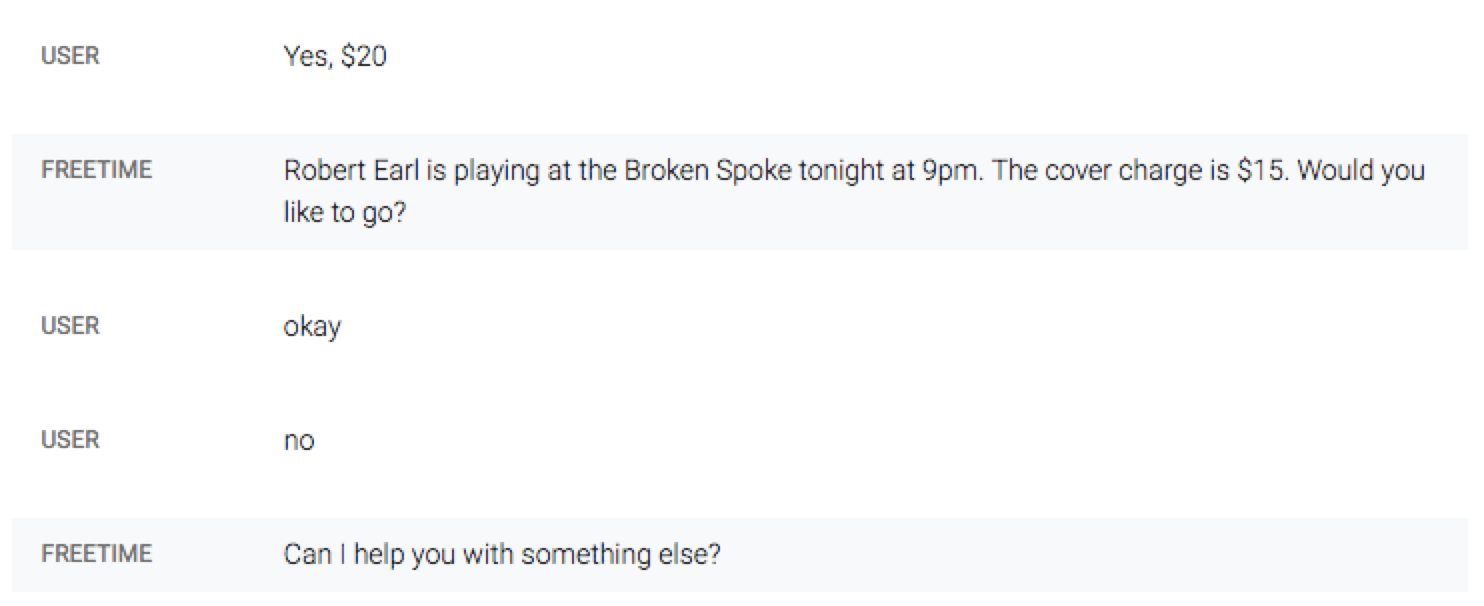

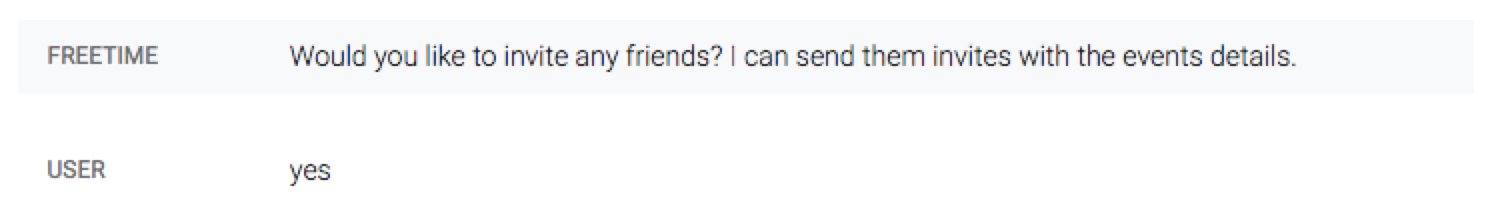

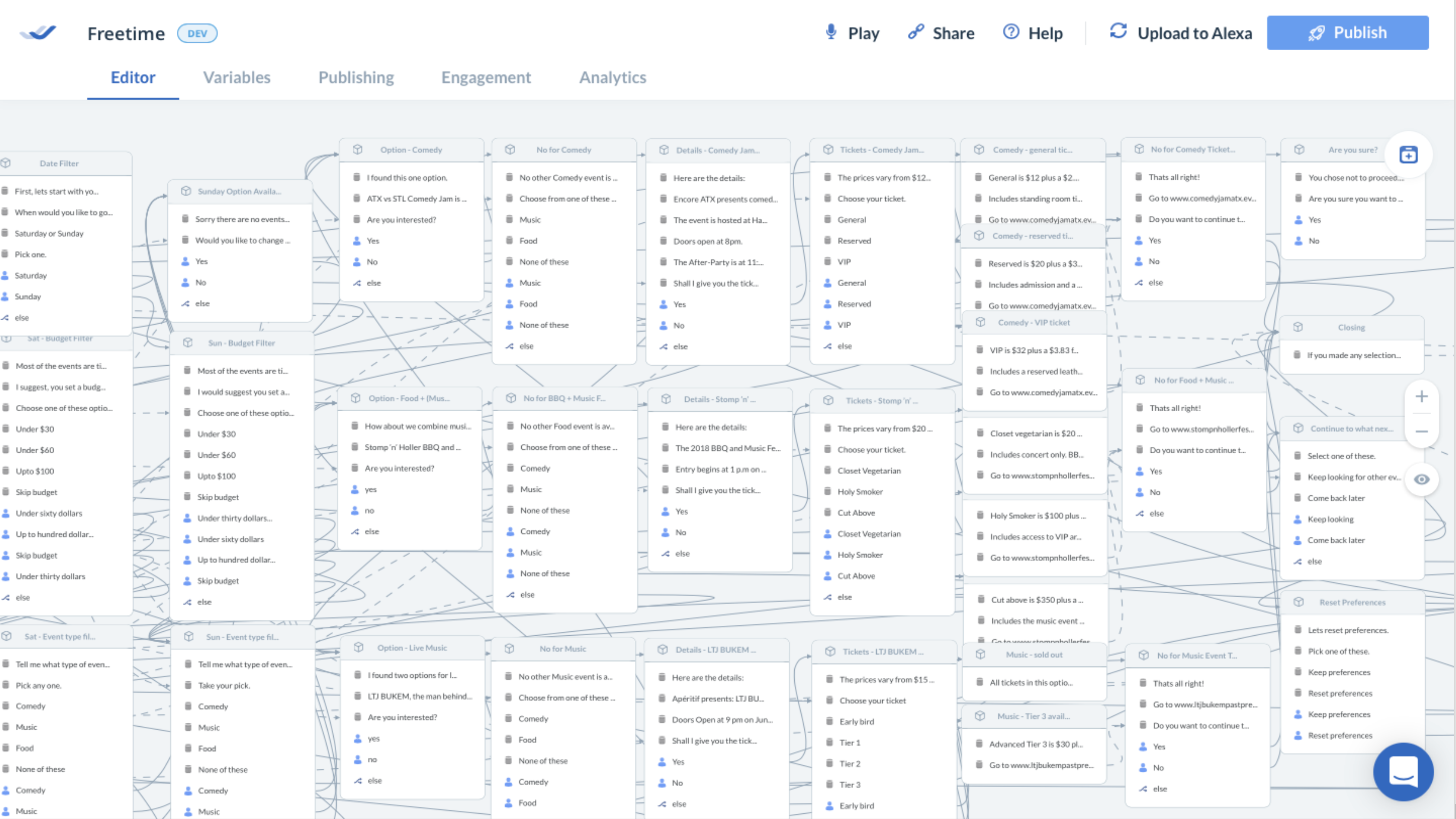

Choosing a platform for building the information flow was crucial, as it gave me the tools to build a successful prototype for usability studies. As lead interaction designer and prototyper, my responsibilities included deciding intents and objects, managing databases, creating conversation flows and, most importantly, bringing the VUI prototype to life within a tight deadline of 3 days.

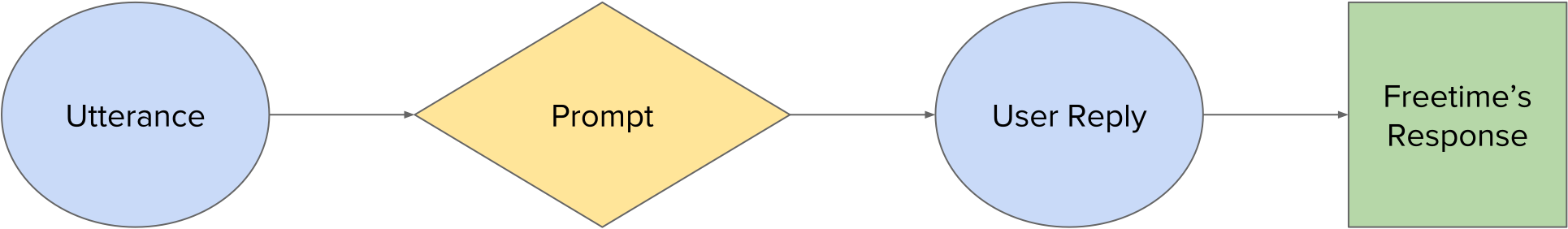

After considering various options, such as role-playing the chosen scenario, the Wizard of Oz method (experiential mockup) and Echosim (an Alexa testing tool), I came across Sayspring, a tool for prototyping Amazon Alexa skills with task flows rather than code. I chose to experiment with it and learned it on the fly while building the prototype itself. I mainly chose this platform for its flexibility in developing an Alexa skill quickly, since we had only 3 days before testing began. I started with a script, written in collaboration with the information architect, and defined all the utterances, intents, slots and prompts needed for each of the conversation flows:

- Choosing an activity to do
- Determining a budget to filter options
- Inviting friends through a contact/favourites list
- Closing the session once all details are confirmed
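To make the utterance/intent/slot/prompt vocabulary more concrete, here is a minimal Python sketch of how these four flows could be modeled as data. It is an illustration only and does not reflect how Sayspring stores a project. The intent names, the slots (`day`, `amount`, `contact`), the sample phrasings and the `missing_slots` helper are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Intent:
    """One conversation flow: what users might say and what Freetime must collect."""
    name: str
    sample_utterances: List[str]                    # phrasings the skill should recognize
    slots: List[str] = field(default_factory=list)  # values Freetime needs before moving on
    prompt: str = ""                                # what Freetime says in this flow


# Hypothetical model of the four Freetime flows; every name and phrasing is illustrative.
FREETIME_INTENTS: Dict[str, Intent] = {
    "ChooseActivity": Intent(
        "ChooseActivity",
        ["what fun things are going on this weekend", "find me something fun to do"],
        slots=["day"],
        prompt="Which day are you free?",
    ),
    "SetBudget": Intent(
        "SetBudget",
        ["my budget is {amount}", "nothing over {amount} dollars"],
        slots=["amount"],
        prompt="How much would you like to spend?",
    ),
    "InviteFriends": Intent(
        "InviteFriends",
        ["invite {contact}", "send it to {contact}"],
        slots=["contact"],
        prompt="Who from your favourites would you like to invite?",
    ),
    "CloseSession": Intent(
        "CloseSession",
        ["that's everything", "we're all set"],
        prompt="Great, you're all set. Have fun!",
    ),
}


def missing_slots(intent: Intent, filled: Dict[str, str]) -> List[str]:
    """Return the slots Freetime still has to ask about before this flow can finish."""
    return [slot for slot in intent.slots if slot not in filled]
```

For example, `missing_slots(FREETIME_INTENTS["SetBudget"], {})` returns `["amount"]`, which tells the sketch to speak the budget prompt. Once an amount has been captured, the list is empty and the flow can hand over to the next one.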

We needed not only to learn how people would interact with our VUI but also to make sure the feedback from testing revealed things we had overlooked, since every user frames a question about the same context differently. It was important for me to include these different phrasings in the flows and to make sure all the keywords were there for Freetime to recognize, so the conversation would not fall into an error loop.

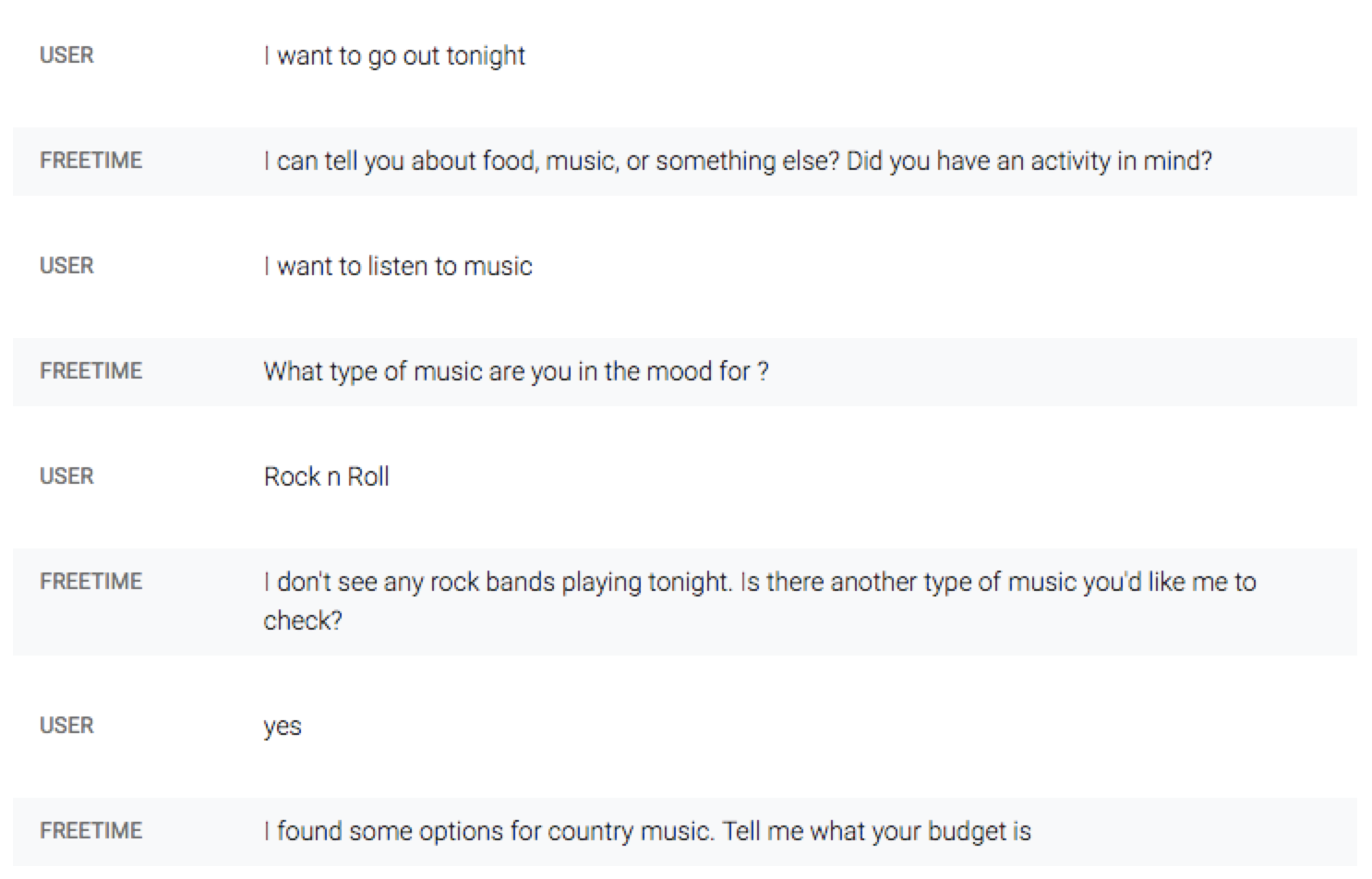

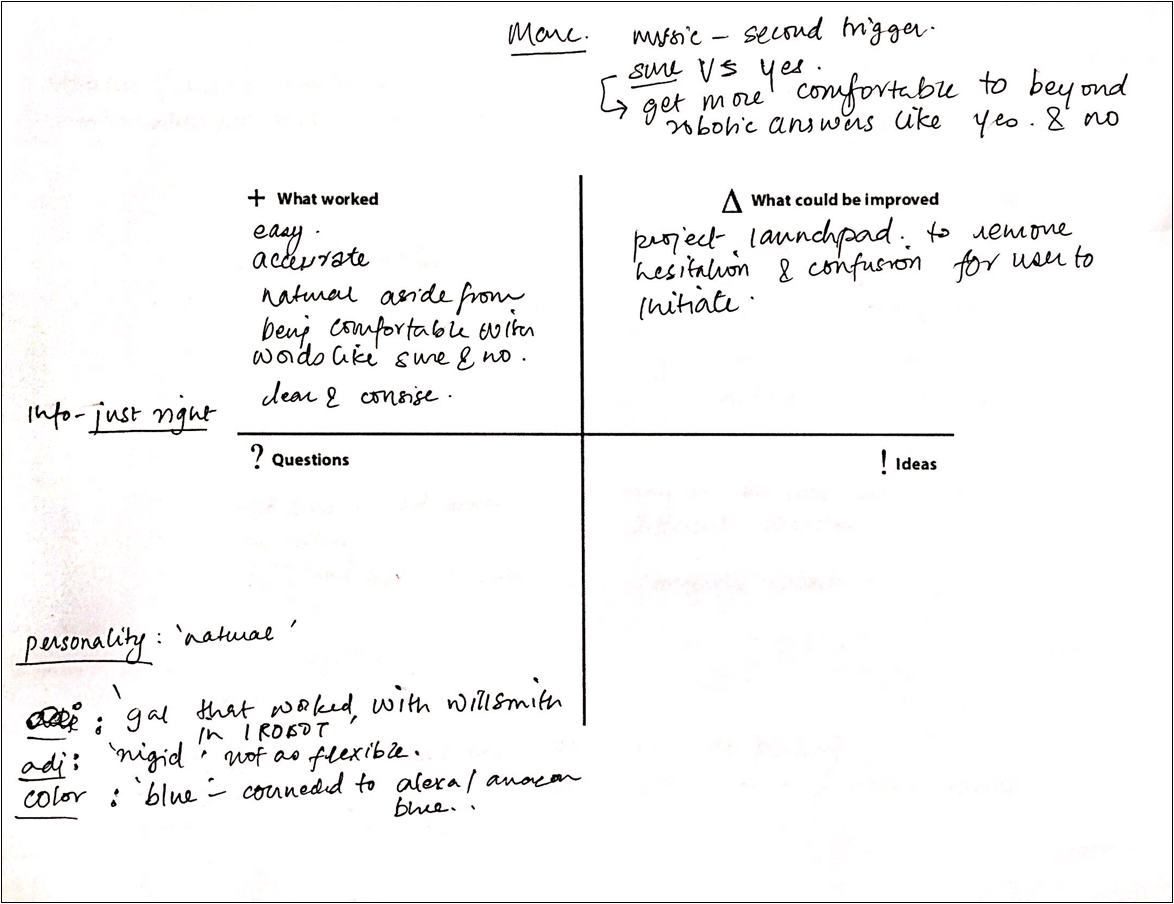

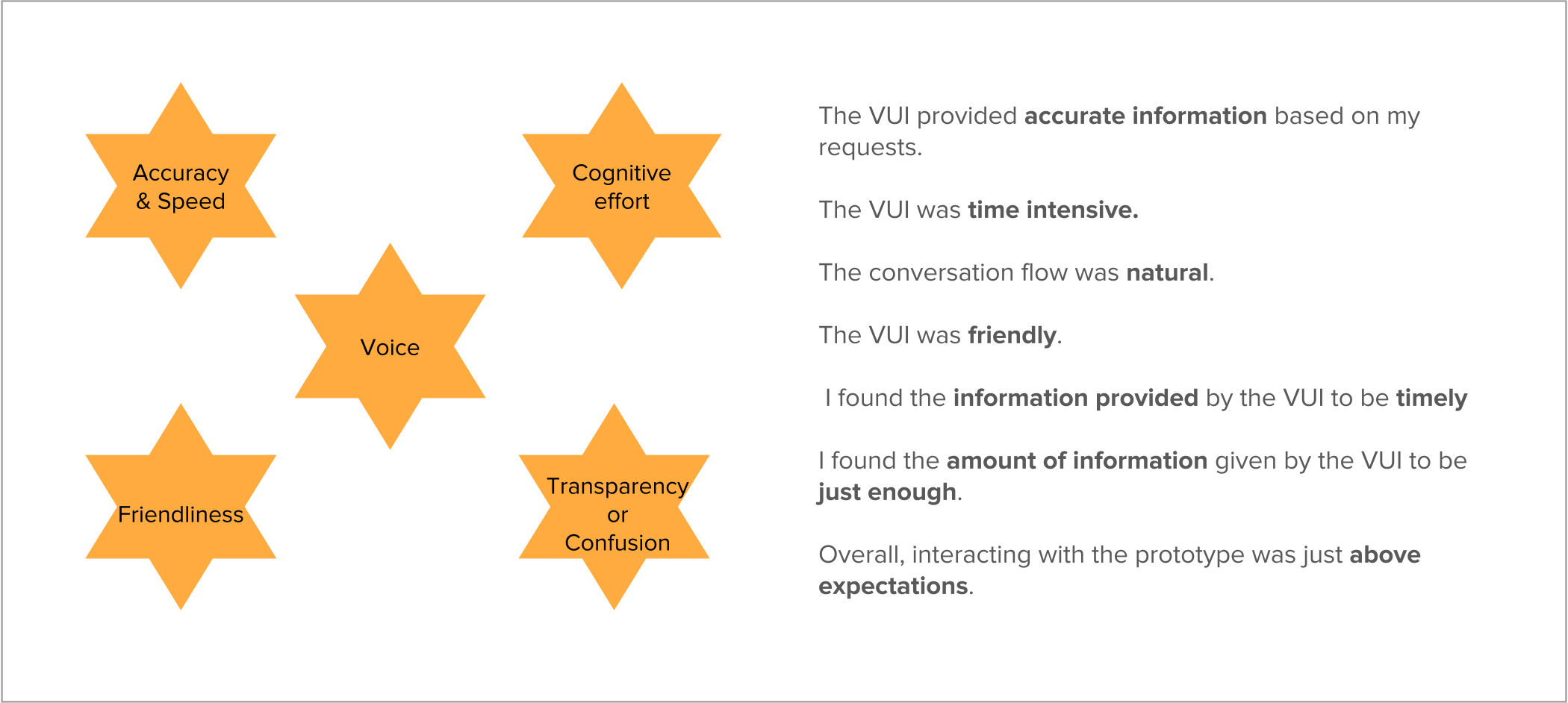

A test script was created based on the scenario we developed. Participants interacted with the prototype, answered open-ended questions and rated certain aspects on a 0-7 Likert scale. One moderator and two observers (including me) captured each session using photos, video and feedback grids.

Rated the app neutral on interaction because only one option was offered, and wanted to be told that there was only one option.

Found the app accurate and not time-intensive. The conversation felt natural except when he changed his response. Fairly friendly and clear, with just the right amount of information for the scenario. Overall, it was what he’d expect.

The following insights are based on observations and follow-up questions asked during the testing sessions.

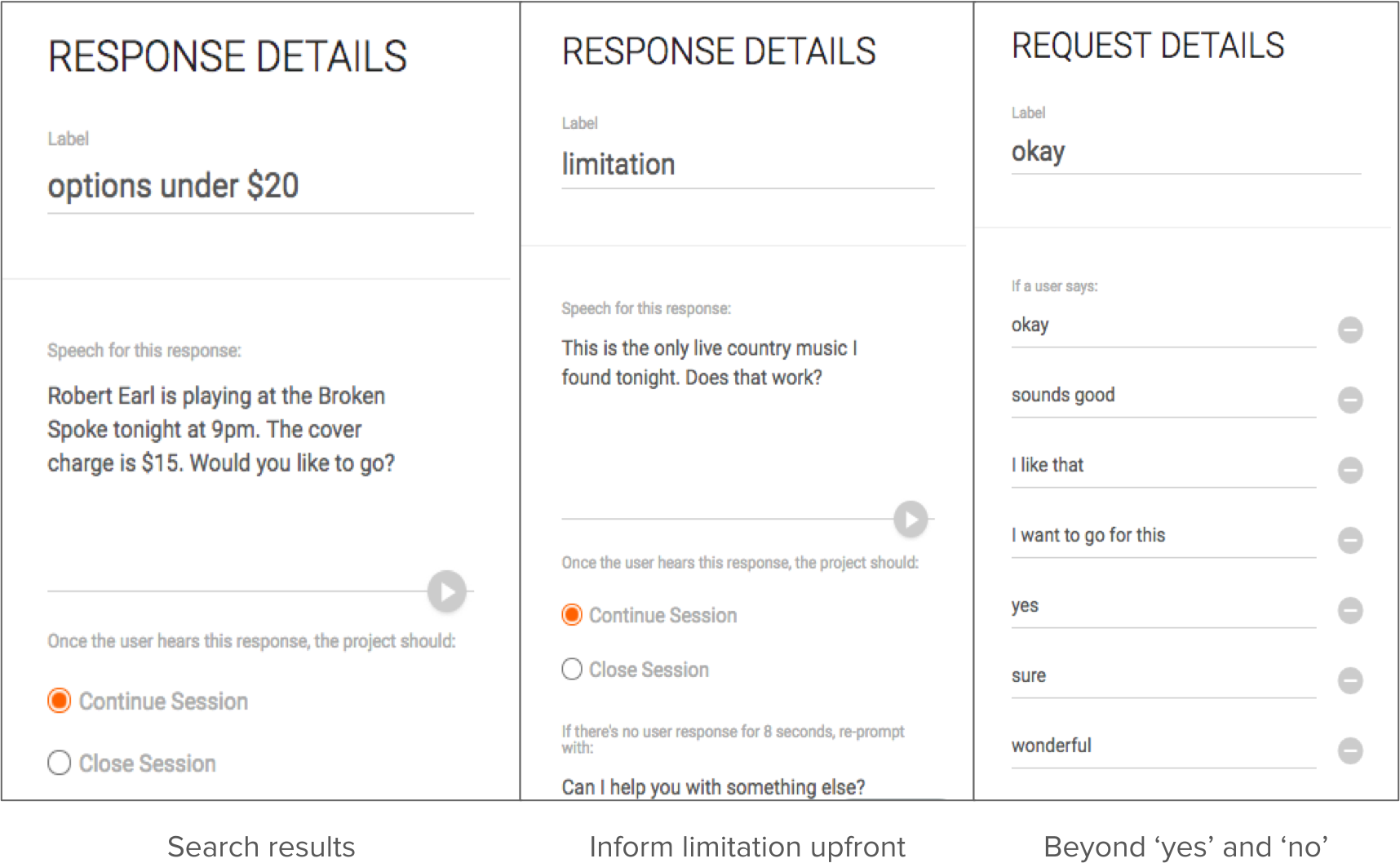

The overall voice vocabulary was edited based on user feedback for improving conversation flows.

For example, in the ‘search result’ flow above, if the user says no to the option Freetime offers, the session now continues into a limitation flow, where Freetime explains that this is the only option available, instead of repeating itself in a loop as it did during testing.

Depending on the answer, Freetime then directs the user either toward driving details for the event or toward closing the session.
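The revised ‘search result’ logic can be sketched as a small state machine. The following is a hypothetical Python model, not the Sayspring project itself. The state names and prompt wording are illustrative. It assumes answers have already been normalized to "yes" or "no", and it also assumes that accepting the first offer leads straight to driving details.

```python
from typing import Dict, List, Tuple

# Hypothetical states for the 'search result' flow; names are illustrative only.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("search_result", "yes"): "driving_details",  # assumed: accepting leads to directions
    ("search_result", "no"): "limitation",        # the fix: no longer re-offers the same result
    ("limitation", "yes"): "driving_details",     # user takes the only option after all
    ("limitation", "no"): "close_session",        # user declines, so Freetime wraps up
}

# Hypothetical wording for what Freetime says on entering each state.
PROMPTS: Dict[str, str] = {
    "limitation": "That's the only option available right now. Would you still like to go?",
    "driving_details": "Great! Here are the driving details to the event.",
    "close_session": "No problem. Have a great weekend!",
}

# States from which the user is still being asked a yes/no question.
QUESTION_STATES = {"search_result", "limitation"}


def next_state(state: str, answer: str) -> str:
    """Advance one turn given a normalized 'yes' or 'no' answer."""
    return TRANSITIONS[(state, answer)]


def run(answers: List[str]) -> List[str]:
    """Walk the flow from 'search_result' and return the states visited."""
    state = "search_result"
    visited = [state]
    for answer in answers:
        if state not in QUESTION_STATES:
            break  # driving_details and close_session end this part of the conversation
        state = next_state(state, answer)
        visited.append(state)
    return visited
```

Because no entry in `TRANSITIONS` maps a state back to itself, two "no" answers in a row now end the session (`search_result`, then `limitation`, then `close_session`) rather than repeating the offer.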

We also found that not all users restrict their answers to a formal yes or no. As they go deeper into the conversation with the VUI, they start talking to it as they would to a friend, giving more elaborate answers than just a yes or a no. This is why I went back and added more options, like ‘I want to go’ and ‘I’d like that’, to the user response slots in the prototype.
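Widening the response slots amounts to expanding the list of phrasings that count as a yes or a no. This minimal Python sketch assumes exact matching after simple normalization. Real voice platforms use their own language understanding and sample utterances rather than a lookup like this. Apart from ‘I want to go’ and ‘I’d like that’, the phrase sets and the function names are hypothetical.

```python
from typing import Optional

# Accepted phrasings; "i want to go" and "i'd like that" come from testing,
# the rest are assumed examples.
AFFIRMATIVE = {"yes", "yeah", "sure", "i want to go", "i'd like that"}
NEGATIVE = {"no", "nope", "not really", "i'll pass"}


def normalize(utterance: str) -> str:
    """Unify curly apostrophes, lower-case, and trim surrounding spaces and punctuation."""
    return utterance.replace("\u2019", "'").lower().strip(" .,!?")


def classify_answer(utterance: str) -> Optional[str]:
    """Map a free-form reply to 'yes' or 'no'; None means Freetime should reprompt."""
    text = normalize(utterance)
    if text in AFFIRMATIVE:
        return "yes"
    if text in NEGATIVE:
        return "no"
    return None
```

With this sketch, "I’d like that!" is treated as a yes. A reply that is not in either set returns `None`, which is exactly the case where the vocabulary needs another entry.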

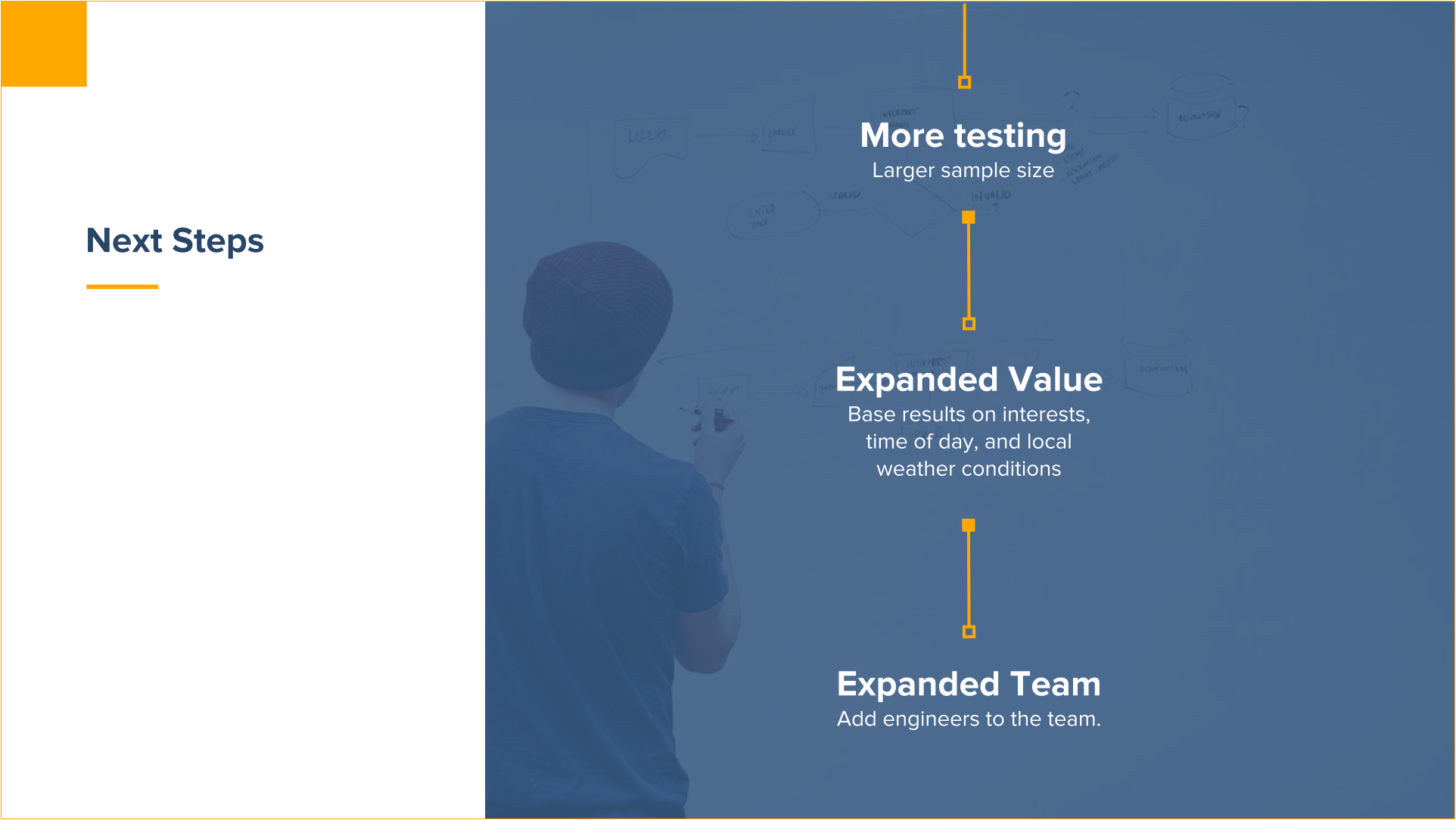

If this project were to move forward, we as a team would need to plan the following (see image on the right):

For the prototype, I would need to conduct more tests with different types of users (extreme, expert, non-user) to get more specific insights into each task and interaction flow.

VUIs also increasingly have a face, as with the Echo Show, the Echo Spot and the Alexa app, so designing a screen-based interface is important too. This would also help make the service design universal.

We live in exciting times, where advances in artificial intelligence and natural language processing are powering products and giving rise to new models of interaction. This opens up the potential for conversational UX to become the de facto way of interacting with products.

Whatever the method, feedback helps me learn what a good conversation looks like for a chatbot. This wasn’t only important when I was first starting out. It will be a continuous process as I keep refining Freetime and any other chatbot I design in the future.

I know I’m still learning new things all the time!

I found I needed to learn more about conversational UI as I went deeper into refining Freetime beyond the constraints of the prototyping tool I was using. Around this time, my professor and mentor Molly McClurg pointed me toward the Austin Chat UX Meetup, which was launching within a month. This meetup gave me the opportunity not only to connect with industry experts but also to showcase Freetime.

I continued building a more robust prototype using Storyline for the Austin UX Open House meetup demo on 30 May 2018.

This meetup also connected me with Voxable's workshop, which offers an end-to-end understanding of how to design content for conversational user interfaces.
